Force

Dec 2015 - Jul 2020

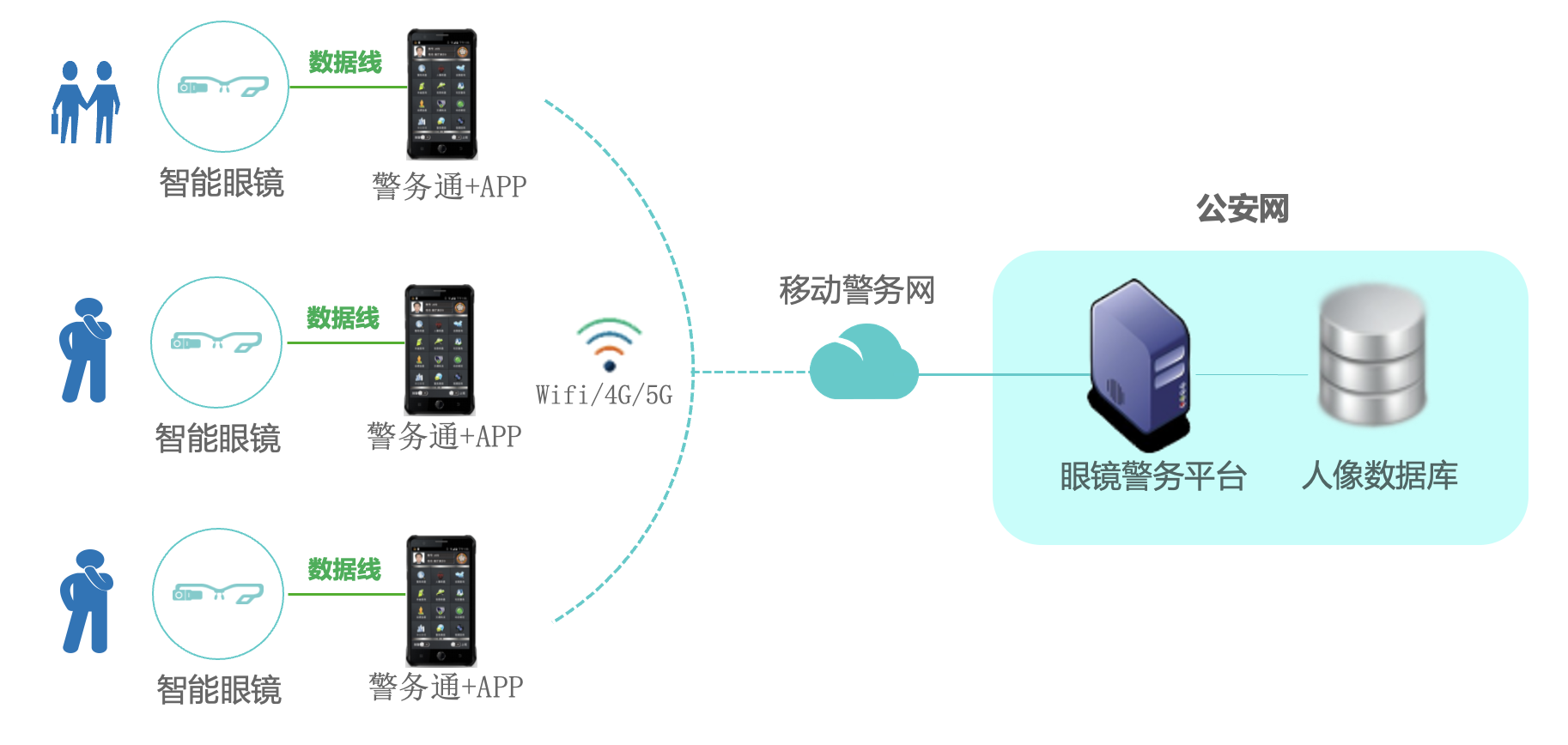

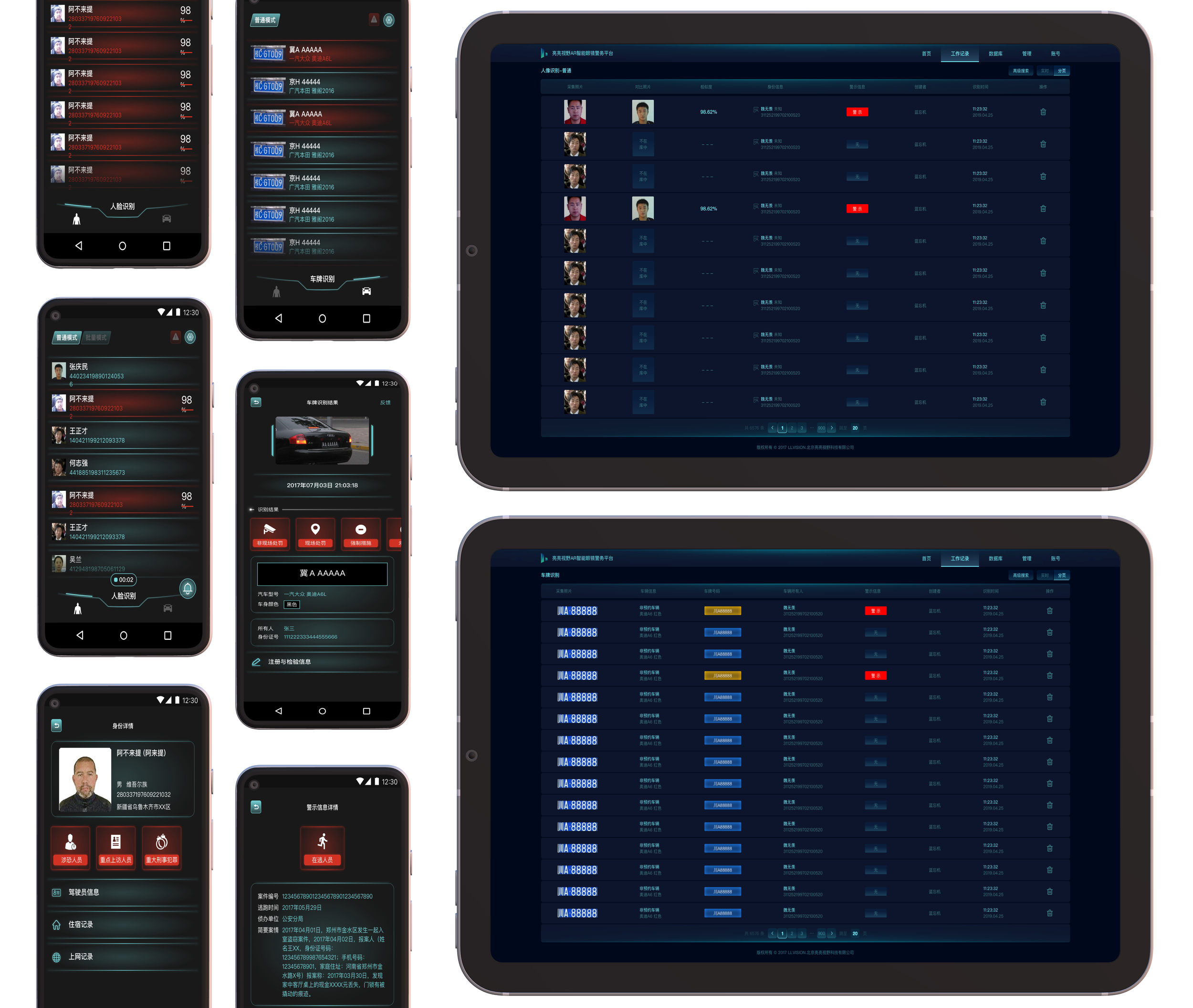

FORCE is the world's first mobile surveillance solution recognized by police and security associations. Users can identify criminals, key personnel, and vehicle license plates through the AR glasses' camera. The identification results, along with networked command and dispatch information, are displayed on the AR glasses' screen. Platform users' data can be aggregated in the backend for unified management and synchronized with the databases and command systems of public security or security agencies.

Contributions

Project management, product definition, market analysis, user research, UX and UI design, system and algorithm design

Design & Development Tools

Figma, Sketch, Adobe After Effects, Xmind

Opportunity Analysis

Market & Technology

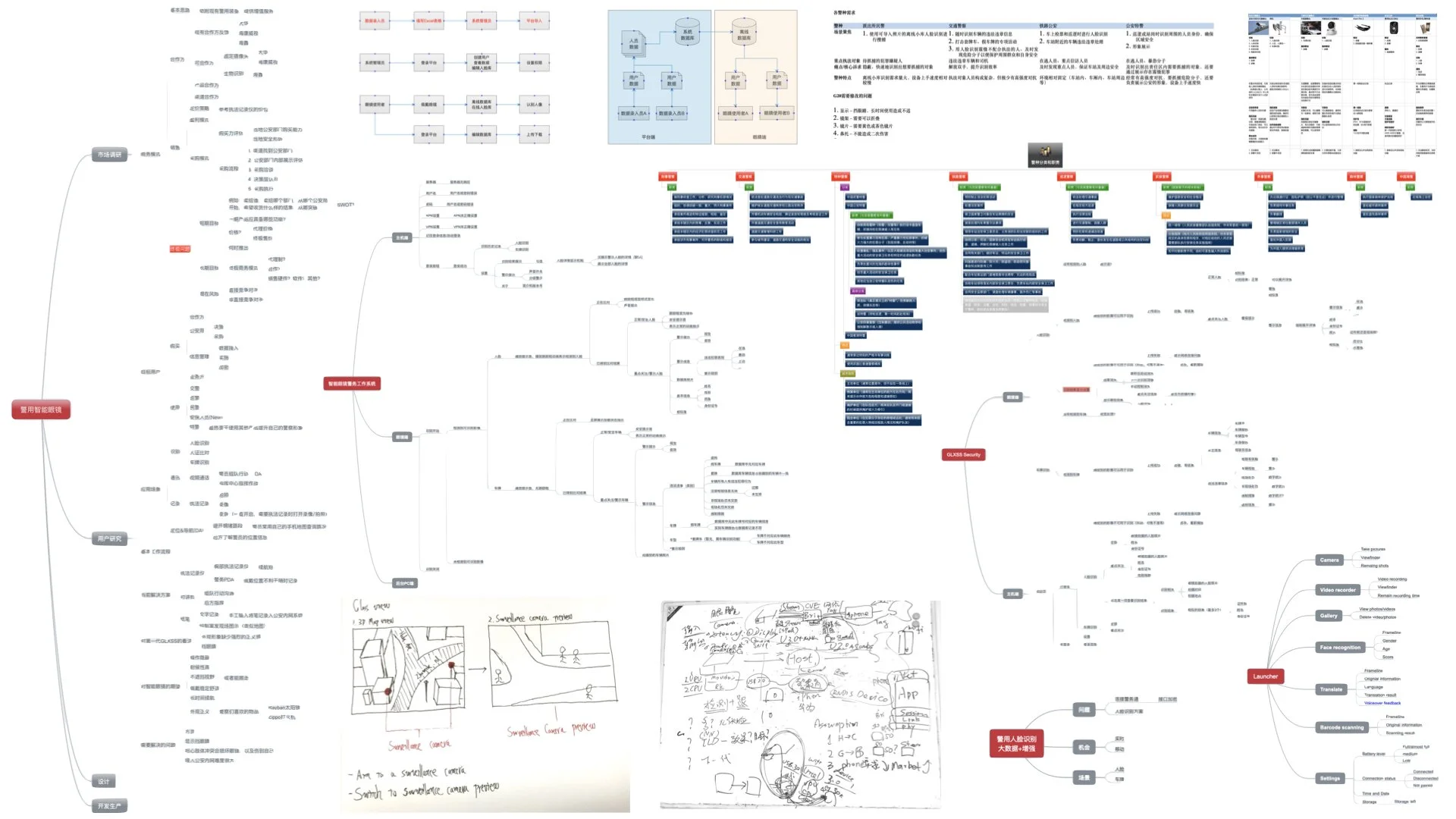

In early research across various industries, we discovered significant commercial potential in the police and security market. This market is large in scale, possesses strong purchasing power, has a good technological foundation for integrating AR, and both frontline users and management have clear pain points.

After conducting in-depth field research with frontline police officers and other public safety personnel, we spent several months following potential users from various police divisions. Through this process, we gained a deep understanding of their workflows, organizational structures, equipment, facilities, and operational pain points.

Ultimately, we identified two scenarios with well-defined user pain points, high commercial value, and strong technical feasibility:

Public Security in Public Places: In places like airports, train stations, tourist attractions, and festival venues, police need to focus on identifying individuals with records of trafficking, drug dealing, and theft. However, these locations have large crowds, and most people are usually on the move, making identification challenging.

Traffic Enforcement: During vehicle crackdown operations and inspections of illegal and violating vehicles in specific areas, it is difficult for police officers to hold smartphones for long periods to identify license plates and drivers due to hand fatigue.

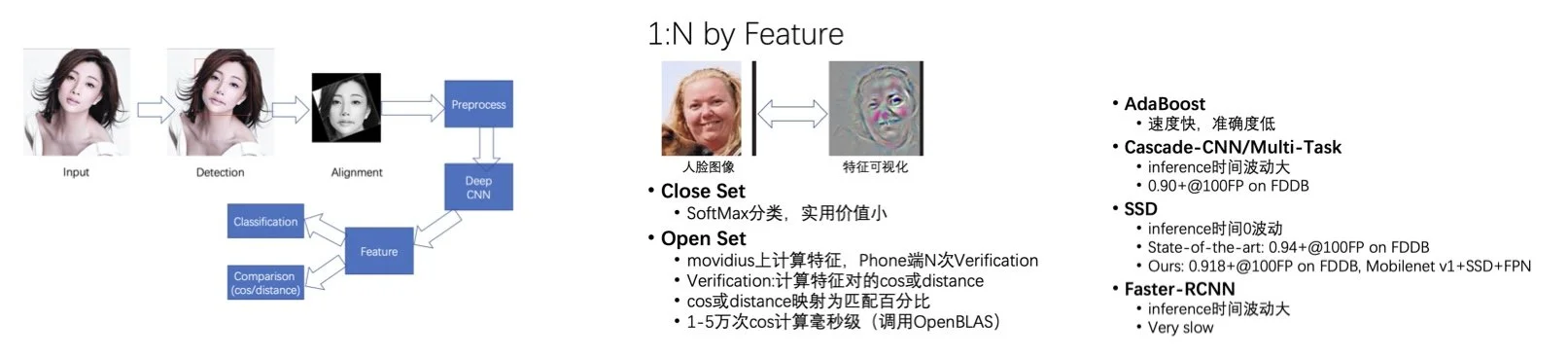

At the same time, we observed rapid advances in deep learning frameworks such as TensorFlow and Caffe. In 2016, facial recognition algorithms on low-power platforms like AR glasses could only perform "1-to-1 face matching" between a face and an ID photo. Worse, recognition took several seconds and accuracy was poor. Just a year later, however, our demo could compare faces captured by the AR glasses' camera with hundreds of faces in a local model database, with recognition time improving to around 2 seconds and accuracy rising dramatically (from approx. 75% to over 95%). Given that mature commercialization requires a face database of thousands, we decided to collaborate with online facial recognition platforms initially.

Define The Product

Based on the findings from the above research, we defined the key features of the product:

Users can utilize the AR glasses' camera for facial and license plate recognition, with the process being automated to minimize manual operation.

The recognition capabilities of the AR glasses should function in low light, during slow movement, and at relatively long distances.

The recognition results displayed by the AR glasses should be concise and clear, presenting key alert information without excessively distracting the user from the real world.

The AR glasses need to synchronize recognition results, on-site images, timestamps, and location information to the server for archival purposes.

Efforts should be made to reduce network bandwidth and concurrency requirements to minimize the cost of network services and servers.

The battery life and comfort of the AR glasses should ensure at least 2 hours of continuous use by the user.

Considering the development complexity and the return on investment for the product, we decided to deliver single face recognition, license plate recognition, multi-face recognition, and offline/online hybrid recognition in stages over the two years following project initiation, through several dozen minor versions and two major versions.

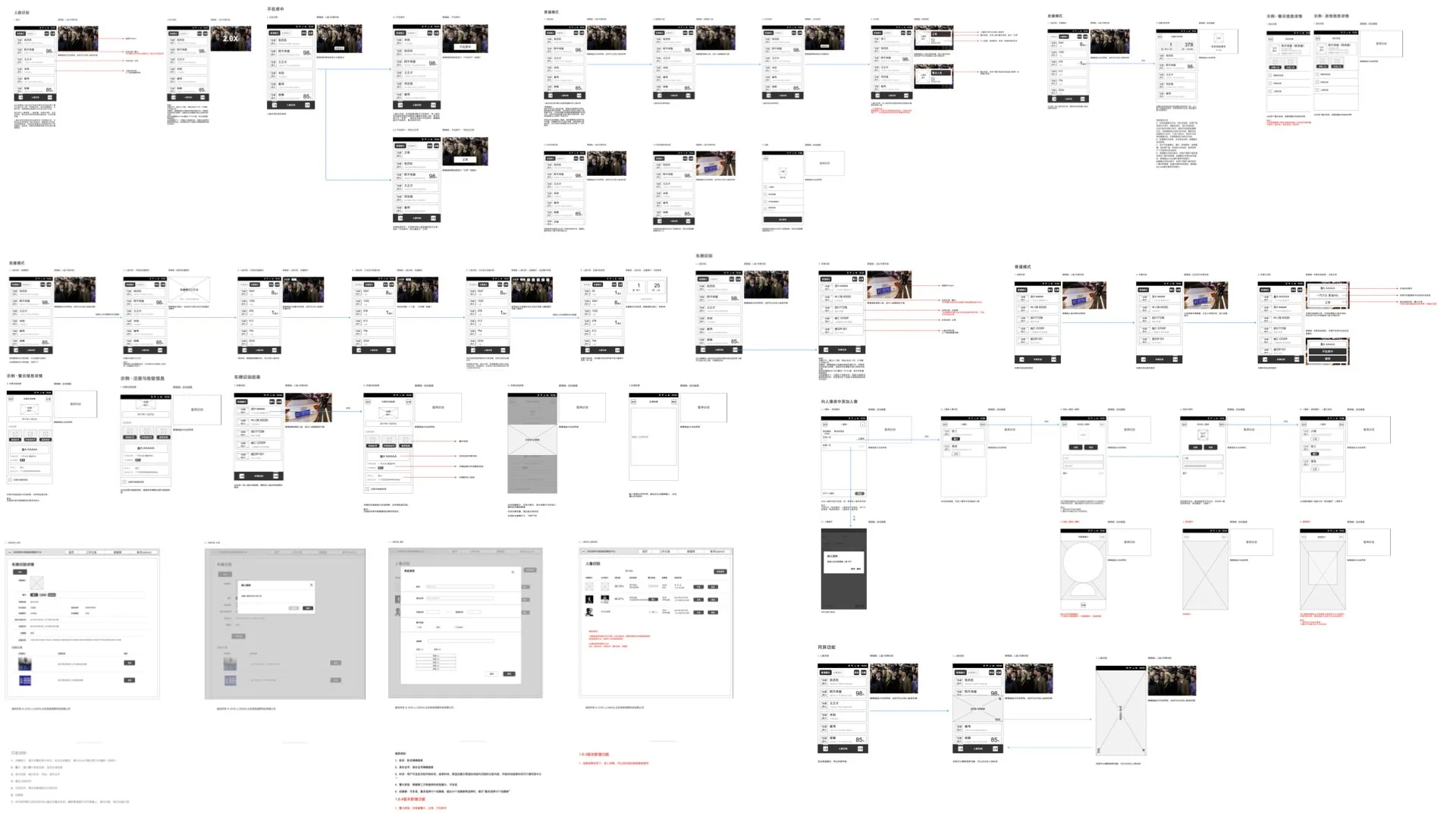

Automatic Recognition

When the facial or license plate recognition feature is enabled, the AR glasses' camera continuously feeds each frame to the algorithm for analysis. Upon detecting a face or license plate, the AR glasses compare the captured image with the database and display the matching result. To prevent the camera from mistakenly recognizing faces and license plates outside the user's focus area, the camera captures video in a 16:9 aspect ratio with a default diagonal field of view of 72°. Each recognition result disappears after 4 seconds, and a new recognition process then starts automatically.
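The display cycle described above can be sketched as a small state holder. This is only an illustration: `RecognitionLoop` and `on_frame` are hypothetical names, and the choice to ignore new matches while a result is still on screen is an assumption inferred from the 4-second behaviour, not a documented detail of FORCE.

```python
from dataclasses import dataclass
from typing import Optional

RESULT_DISPLAY_SECONDS = 4.0  # from the text: results disappear after 4 seconds


@dataclass
class RecognitionLoop:
    """Hypothetical controller: shows one result at a time, then resumes scanning."""

    shown_result: Optional[str] = None
    shown_at: float = 0.0

    def on_frame(self, now: float, match: Optional[str]) -> Optional[str]:
        """Handle one camera frame and return what the display currently shows."""
        # Clear an expired result so that a new recognition pass can begin.
        expired = now - self.shown_at >= RESULT_DISPLAY_SECONDS
        if self.shown_result is not None and expired:
            self.shown_result = None
        # Assumption: while a result is on screen, new matches are ignored.
        if self.shown_result is None and match is not None:
            self.shown_result = match
            self.shown_at = now
        return self.shown_result
```

Calling `on_frame` once per frame with the current time and the latest match (or `None`) is enough to drive the display; the caller supplies the clock, which keeps the sketch easy to test.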

Optimize Performance

We made extensive performance optimizations to FORCE's AR glasses RTOS and its accompanying app.

Our first challenge was to reduce the network concurrency and traffic pressure for online recognition. If we used a traditional server-side algorithm to analyze video streams, each pair of AR glasses would need to transmit high-bitrate 1080P video at several frames per second to the server, resulting in enormous traffic consumption and extremely high server costs. After two years of technical exploration, we were confident that the AR glasses could leverage their onboard VPU (Vision Processing Unit) to handle some of the preliminary work, thereby alleviating the network and server-side burden. We ultimately decided to run the face detection and tracking algorithms on the VPU of the AR glasses, converting the results into encrypted feature value strings that the server-side algorithms can parse. This approach increases the server's concurrency capacity by hundreds of times compared to the conventional video stream recognition method.

Another major challenge arose from early user feedback: with the default 72° diagonal FOV of the early algorithms and camera, the AR glasses could only recognize faces at a distance of around 2 meters. This fell short of the users' frequent need to identify targets at distances of over 5 meters. To address this, we first optimized the algorithm to reduce the portrait capture requirement from 128x128 pixels to 80x80 pixels without significantly sacrificing recognition accuracy. Additionally, by optimizing the ISP, we implemented a "Crop FOV" technique on the camera CMOS, which fully utilized all pixels within a limited area. This added 45° and 30° camera FOV options. As a result, the recognition distance for faces increased from 2 meters to 7.8 meters, and for license plates from less than 5 meters to 18 meters.
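The effect of a narrower FOV and a smaller pixel requirement on recognition distance can be estimated with a simple pinhole-camera model. The sketch below is a back-of-the-envelope check, not the method used on the product. It assumes a face about 0.16 m wide and a 1920x1080 output frame at every FOV setting; both values, and the function name, are illustrative assumptions.

```python
import math


def max_recognition_distance(target_width_m: float,
                             min_pixels: float,
                             diagonal_fov_deg: float,
                             diagonal_pixels: float) -> float:
    """Distance at which a target of the given width still spans min_pixels.

    Pinhole model: at distance d the frame diagonal covers
    2 * d * tan(fov / 2) metres, spread over diagonal_pixels pixels.
    """
    half_fov = math.radians(diagonal_fov_deg) / 2
    return target_width_m * diagonal_pixels / (2 * math.tan(half_fov) * min_pixels)


FACE_WIDTH_M = 0.16               # assumed average face width
DIAGONAL_PX = math.hypot(1920, 1080)  # assumed 1080P output at every FOV

baseline = max_recognition_distance(FACE_WIDTH_M, 128, 72, DIAGONAL_PX)
crop_45 = max_recognition_distance(FACE_WIDTH_M, 80, 45, DIAGONAL_PX)
crop_30 = max_recognition_distance(FACE_WIDTH_M, 80, 30, DIAGONAL_PX)
print(f"72 deg, 128 px: {baseline:.1f} m")
print(f"45 deg,  80 px: {crop_45:.1f} m")
print(f"30 deg,  80 px: {crop_30:.1f} m")
```

Under these assumptions the estimate comes out at about 1.9 m for the original configuration and about 8.2 m for the 30° crop with the 80-pixel requirement, which is in the same range as the measured 2 m and 7.8 m. The model ignores lens distortion, focus, and motion blur, so it should be read as a plausibility check only.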

As the product became more user-friendly, users started utilizing it in overcast weather, indoors, and even at night. Due to increased camera noise and the slower shutter speeds required for exposure, the AR glasses struggled to capture clear images of faces and license plates for recognition. To address this, we optimized the camera's noise performance and significantly adjusted the exposure curve. This ensured that both the user and the subject being recognized only needed to maintain a brief moment of relative stillness for successful recognition. The AR glasses are designed to prefer a faster shutter speed with a higher ISO to avoid motion blur. However, to protect the user experience, if the exposure value is too low (gray area in the chart), the device will neither raise the ISO nor slow the shutter further to reach correct exposure. Instead, it will notify the user to add artificial lighting.

In addition, we meticulously calculated and tested the depth of field coverage of the AR glasses' primary camera. Working closely with our manufacturing partners, we fixed the primary camera's focus at approximately 1.6 meters, effectively turning it into a fixed-focus camera. This eliminates the need for the camera to autofocus, saving time.

When FORCE began large-scale use in 2018, users quickly brought it to crowded places such as airports, train stations, tourist attractions, and industrial parks. They also requested a feature to detect and recognize multiple faces simultaneously with the AR glasses. After on-site investigations, we found that a police officer or security officer typically encountered up to five faces or three license plates in front of them, making this feature feasible from a computational standpoint. Additionally, since users were no longer focusing their attention on a single target, we decided to remove the power-consuming tracking algorithm and instead used the detection algorithm alone to capture images of faces and license plates. Duplicate recognition results were merged in the backend data management system. Ultimately, the product achieved high-speed, automatic detection and recognition of targets in front of the user.
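Without tracking, the same person or plate can be detected in many consecutive frames, so the backend has to collapse repeats. The text does not say how the merge was done. The sketch below shows one plausible rule, assuming each result carries a target identifier and a timestamp, and that repeats of the same target within a fixed window count as one sighting. `Hit`, `merge_duplicates`, and the 60-second window are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

MERGE_WINDOW_SECONDS = 60.0  # assumed value, not taken from the product


@dataclass(frozen=True)
class Hit:
    """One recognition result uploaded by the glasses (hypothetical shape)."""

    target_id: str
    timestamp: float


def merge_duplicates(hits: List[Hit],
                     window: float = MERGE_WINDOW_SECONDS) -> List[Hit]:
    """Keep the first hit of each sighting and drop repeats of the same target.

    A repeat is a hit for the same target within `window` seconds of that
    target's previous hit, so a target seen continuously stays one sighting.
    """
    merged: List[Hit] = []
    last_seen: Dict[str, float] = {}
    for hit in sorted(hits, key=lambda h: h.timestamp):
        previous = last_seen.get(hit.target_id)
        if previous is None or hit.timestamp - previous > window:
            merged.append(hit)
        last_seen[hit.target_id] = hit.timestamp
    return merged
```

A sliding window is used here so that a person who stays in view does not generate a new record every minute; a fixed window per record would be an equally reasonable choice.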

Display Recognition Results

Due to the limitations of AR optical technology at the time, we could only provide users with a 640x400 pixel resolution display with a diagonal field of view (FOV) of only 20°, while ensuring comfort and reasonable cost. Since most of the user's attention was not focused on this screen, presenting recognition results efficiently became a challenge.

After carefully collecting feedback from different users, we identified the key information that needed to be displayed in recognition results for various scenarios: database photos of faces or license plates, identity information, and details of warning messages. Further analysis revealed that the most crucial element was the warning information, which could be in one of three states: warning, normal, or unknown. Based on this, I designed the following face and license plate recognition result animations.

Users can quickly determine the type of warning through this design and read the key information within just a few seconds. To further optimize the experience, I also added audio cues to the recognition results and synchronized the timing as closely as possible with the animation.

Online + Offline

By 2019, the Caffe-based algorithm models had been optimized from several hundred megabytes in 2016 to under 10 megabytes, enabling mobile platforms to store and process data for up to 100,000 faces. This advancement meant that many small and medium-sized enterprises could perform basic face and license plate recognition using only AR glasses and smartphones, without the need to purchase expensive servers. Even for police forces with online recognition platforms, offline recognition provided an additional option for users in poor network conditions or when faster recognition was needed. Consequently, we redesigned the collaboration between offline and online recognition processes, ensuring compatibility without significantly increasing computational load.

The offline/online hybrid recognition system operates as follows:

Upon generation of the offline recognition result, it is immediately displayed on the AR glasses.

Once the online recognition result is available, if it aligns with the offline result, no further display is necessary. However, if there's a discrepancy, the online result is immediately displayed on the AR glasses, overwriting the offline recognition record in the mobile app.

When the cellular network is unavailable, only the offline recognition algorithm is employed, and its results are displayed on the AR glasses.
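The three rules above can be sketched as a single function. This is a minimal illustration rather than the shipped logic: the function name, the use of plain strings for results, and the returned pair (what the glasses display, what the app stores) are assumptions made for the example.

```python
from typing import List, Optional, Tuple


def resolve_hybrid(offline: Optional[str],
                   online: Optional[str],
                   network_available: bool) -> Tuple[List[str], Optional[str]]:
    """Return (results shown on the glasses in order, record kept in the app)."""
    displayed: List[str] = []
    record = offline

    # Rule 1: the offline result is shown as soon as it exists.
    if offline is not None:
        displayed.append(offline)

    # Rule 3: without a network, the offline result is all there is.
    if not network_available or online is None:
        return displayed, record

    # Rule 2: an online result that disagrees is shown and replaces the record;
    # an online result that agrees causes no further display.
    if online != offline:
        displayed.append(online)
        record = online
    return displayed, record


print(resolve_hybrid("Alice", "Alice", True))   # agreement
print(resolve_hybrid("Alice", "Bob", True))     # discrepancy
print(resolve_hybrid("Alice", None, False))     # no network
```

Treating the online result as authoritative only when it disagrees keeps the common case silent, which matches the goal of not distracting the user with redundant updates.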

Data Management

When the collected face and license plate data are synchronized with the backend, the server must not only return online recognition results but also present these results efficiently. By implementing logical functional partitions and color-coded markers, platform administrators can quickly locate the information they are interested in within the vast amount of data.

Use Cases

FORCE has been successfully implemented in diverse scenarios such as law enforcement, public safety, enterprise security, and hospitality, covering various provinces in China and numerous other countries.